Products and Services

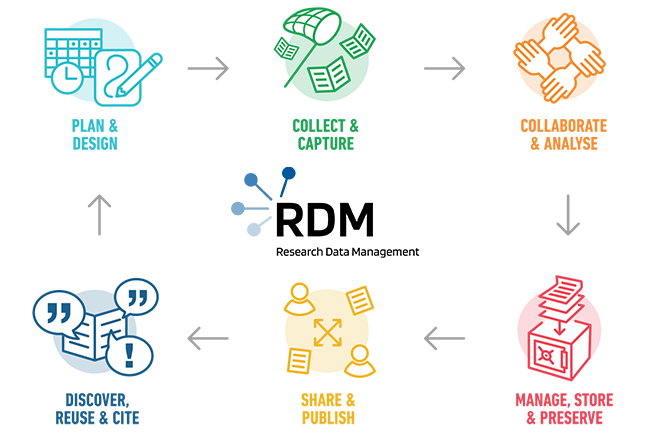

The RDM Team develops and operates tools and software services for all parts of the data management lifecycle at the UFZ. An ongoing activity is the modernization and modularization of the infrastructure for the acquisition, processing and use of research data. The objectives include:

- Support of FAIR principles

- Advancement of digitalisation and data science methods

- Use of modern technologies and their flexible further development

- Integration of established applications

- Automation of data collection, processing and visualisation processes

- Assignment of Digital Object Identifiers (DOI) to research data deposited in the archiving component of the Data Management Portal

In addition to the core products and specific services listed below, we offer general training and support. For basic RDM knowledge and our RDM training courses, please see our RDM Guidelines.

Image by vecstock on Freepik


Cross-domain products

The core data management infrastructure at UFZ consists of the Data Management and Data Investigation Portal and the System for automated Quality Control:

The Data Investigation Portal (DRP) provides public access to the data administered in the Data Management Portal and lets users search it. What it displays is limited to metadata and non-restricted information. DRP users can thus get an overview of the data sets and, if necessary, contact the author to request access to the data.


The Data Management Portal (DMP) enables UFZ employees to manage research data, ensure its quality and describe it comprehensively with metadata. Its operation and functionality are geared to the needs of data collectors. Data is structured in data projects, which combine data sets of different data types and allow access and editing rights to be assigned. The data collected in the Data Management Portal can be searched in the Data Investigation Portal (DRP) and presented publicly or made available as required.


The Data Management Portal supports the administration of the following types of data:

- Time series data

- Sample and analysis data

- Field management data

- File-based archive data.

User manuals and further information can be found in the RDM Guidelines.

Quality control of numerical data is a profoundly knowledge- and experience-based activity. Finding a robust setup is typically a time-consuming and dynamic endeavor, even for an experienced data expert.

Our System for automated Quality Control (SaQC) addresses the iterative and explorative character of quality control with extensive setup and configuration options and a Python-based extension language. Beneath its user interfaces, SaQC is highly customizable and extensible.

SaQC is an RDM Open Source project publicly available on our GitLab page for SaQC. The documentation can be found here.

Cite SaQC using the DOI: https://doi.org/10.5281/zenodo.5888547
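To make the idea of configurable, deterministic quality checks more concrete, the following is a minimal sketch in plain Python. It does not use or reproduce the SaQC API. The flag labels, the `RangeCheck` class and the `missing_check` and `run_checks` functions are hypothetical names chosen for illustration, and the soil moisture values are invented sample data.

```python
import math
from dataclasses import dataclass

# Hypothetical flag labels for this sketch; SaQC defines its own flagging schemes.
UNFLAGGED = "OK"
BAD = "BAD"


@dataclass
class RangeCheck:
    """Flag values that fall outside the closed interval [lower, upper]."""

    lower: float
    upper: float

    def __call__(self, values, flags):
        # NaN values are left to the missing-value check; existing flags are kept.
        return [
            BAD if not math.isnan(v) and not (self.lower <= v <= self.upper) else f
            for v, f in zip(values, flags)
        ]


def missing_check(values, flags):
    """Flag missing observations, represented here as NaN."""
    return [BAD if math.isnan(v) else f for v, f in zip(values, flags)]


def run_checks(values, checks):
    """Apply each check in order, starting from an all-unflagged series."""
    flags = [UNFLAGGED] * len(values)
    for check in checks:
        flags = check(values, flags)
    return flags


# Invented sample data: volumetric soil moisture as a fraction between 0 and 1.
soil_moisture = [0.21, 0.23, float("nan"), 1.70, 0.22]
flags = run_checks(soil_moisture, [missing_check, RangeCheck(0.0, 1.0)])
print(flags)  # ['OK', 'OK', 'BAD', 'BAD', 'OK']
```

Because each check is an independent callable, the list passed to `run_checks` acts as the configuration: checks can be reordered, re-parameterised or extended with custom ones while a setup is being explored iteratively.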

Domain-specific data management workflows and infrastructures

These products and services are intended to complement the core RDM infrastructure and enable the integration of data from different areas of environmental research.

BioMe focuses on the development of a new modular web platform that supports the operation of citizen science projects in the field of biodiversity. There are already many projects of this kind that BioMe aims to technically modernize and make more attractive to the participating community, for example:

- TMD - Butterfly Monitoring Germany

- BiolFlor - Database of biological-ecological Characteristics of the Flora of Germany

- LEGATO - Rice Ecosystem Services

- ALARM - Assessing LArge scale Risks for biodiversity with tested Methods

BioMe is a contribution to NFDI4Biodiversity.

Within this project, an image data repository based on OMERO will be implemented at the UFZ. Together with the departments phyDiv, BIOTOX and ZELLTOX as well as the iCyt platform, we are establishing workflows for data acquisition with high-content, high-throughput microscopes, for storing image data and metadata in the repository, and for using the (meta)data in data analytics workflows based on KNIME and other machine learning tool chains.

With INTOB-DB we designed a database for recording toxicological effects of various chemicals on organisms. An efficiently designed web application supports the planning and execution of experiments. The centrally and schematically stored data can be further used by analysis tools, machine learning and pattern recognition.

In this project we aim to improve the existing geodata infrastructure at UFZ. In addition to the already established services that mainly use ESRI products, we want to refine the capabilities and promote FAIR management of geodata with an open source software stack, e.g. GeoNetwork, Thredds, GeoNode, GeoServer, MinIO, WIGO. This includes the provision of a state-of-the-art storage infrastructure, tools to support metadata management and cataloging, and automated workflows for data processing, visualization, and publishing.

Grafana is a new software solution for visualizing time series data in dashboards. It offers many different chart types and can also be used to provide public access to selected data. At UFZ, we provide Grafana as a service that can display data from the logger component of the Data Management Portal and can also be used in combination with Postgres databases.

An example application is the visualisation of soil moisture time series data of the Swabian MOSES Campaign (German only):

An electronic lab notebook (also known as an electronic laboratory notebook, or ELN) is software that replaces paper laboratory notebooks for documenting research, experiments, and procedures performed in a laboratory.

Chromeleon is a chromatography data system that combines data collection and processing for measuring devices from different manufacturers. We provide the Chromeleon software as a service and aim to simplify measurement data backup and archiving as well as update and upgrade procedures.

KNIME Analytics Platform is an open-source software for creating data science workflows. KNIME Server is the enterprise software for team-based collaboration, automation, management, and deployment of data science workflows as analytical applications and services.

Software and workflows for data pipelines

These tools and services focus on supporting quality control and assurance for data that will be integrated into the broader RDM infrastructure at UFZ and beyond.

For the main field observatories, e.g. TERENO and MOSES, and other observation and monitoring facilities of the UFZ, the RDM team has built quality control pipelines that address the individual requirements and data flows of each project. Examples include pipelines for:

- Global Change Experimental Facility (GCEF)

- Talsperren Observatorium Rappbode (TOR) (German only)

- Data streams for observatories of the Department of Computational Hydrosystems

- Cosmic-Ray Neutron Sensing (CRNS)

In parallel to the development of SaQC, which enables quality control by facilitating the implementation of deterministic tests, the RDM team also explores the usability of machine-learning (ML) algorithms to perform automatic quality control (QC) of data. Soil moisture data from several UFZ observatories are utilized for this use case.

Data infrastructures of the Helmholtz Research Field Earth and Environment

To support the interoperability and integrative use of data products, services, and tools, the centers of the Helmholtz Research Field Earth and Environment are collaborating to build a common data infrastructure within the Helmholtz DataHub initiative.

The Sensor Management System (SMS) is being developed jointly by UFZ and the German Research Centre for Geosciences (GFZ). The system includes a web client, a RESTful API service for managing sensor metadata, and a web application and API for managing the controlled vocabularies of the sensor metadata management system, based on a modified ODM2 schema.

Together with the German Research Centre for Geosciences (GFZ) and the Forschungszentrum Jülich (FZJ), the UFZ is developing a platform for discovering and monitoring environmental research data, called Observatory View (OV). One part of this project is the dashboard software Grafana, which is ready to be used. Other components like the APIs for external data integration and a portal for discovering the available data are in development. The MOSES Elbe 2020 campaign will be implemented as a first showcase.

The Datahub Stakeholder View (SV) is a project initiated by the German Research Centre for Geosciences (GFZ), Forschungszentrum Jülich (FZJ) and UFZ. It bundles and visualizes selected data products of the Helmholtz Research Field Earth and Environment on one website. The target audience of this viewer is the general public (politicians, representatives of public institutions, business and civil society, journalists, interested individuals).